Learn all about the Sigmoid Activation Function, its properties, applications, and benefits. Discover how this popular mathematical function is used in neural networks, machine learning, and deep learning algorithms.

Introduction

The Sigmoid Activation Function is a fundamental concept in the field of artificial intelligence and machine learning. It plays a crucial role in shaping the behavior of neural networks, allowing them to model complex and non-linear relationships between inputs and outputs. In this comprehensive guide, we will explore the ins and outs of the Sigmoid Activation Function, its applications, advantages, and potential drawbacks. Whether you are a seasoned data scientist or a curious learner, this article will provide you with valuable insights into the world of Sigmoid Activation Functions.

Sigmoid Activation Function: Explained

At its core, the Sigmoid Activation Function is a mathematical function that maps any real value to a range between 0 and 1. It is represented by the formula:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

where $x$ is the input to the function and $\sigma(x)$ is the output, which is always constrained between 0 and 1. This property makes it particularly useful in applications where we need to introduce non-linearity in the neural network’s activation.
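As a minimal sketch of the definition above, the function can be written in a few lines of NumPy. The name `sigmoid` and the `exp(-|x|)` rearrangement are choices made for this illustration, not something the article prescribes; the rearrangement only avoids overflow for very negative inputs.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + exp(-x)), written to avoid overflow."""
    x = np.asarray(x, dtype=float)
    # exp(-|x|) always lies in (0, 1], so it can never overflow.
    e = np.exp(-np.abs(x))
    # For x >= 0: 1 / (1 + exp(-x)).  For x < 0: exp(x) / (1 + exp(x)).
    return np.where(x >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

values = sigmoid([-1000.0, -2.0, 0.0, 2.0, 1000.0])
print(values)
```

Mathematically the output never reaches 0 or 1, but in floating point very large inputs round to exactly 0.0 or 1.0.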

The term “sigmoid” refers to the S-shaped curve that the function produces, which resembles the letter “S.” This unique shape gives the function its name and defines its behavior as it processes input data.

The Importance of Sigmoid Activation Function in Neural Networks

In neural networks, the Sigmoid Activation Function plays a vital role in introducing non-linearity to the model. Non-linearity is essential because most real-world data is not linearly separable, meaning it cannot be classified using a straight line or a linear equation.
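To see why the non-linearity matters, the following sketch compares a tiny two-layer network with and without a sigmoid between the layers. The weights, the input, and the helper names `linear_net` and `sigmoid_net` are hypothetical values chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical weights for a tiny two-layer network (no biases, for brevity).
W1 = np.array([[1.0, -1.0],
               [2.0,  0.0]])
W2 = np.array([[1.0, 1.0]])
x = np.array([1.0, 2.0])

def linear_net(v):
    return W2 @ (W1 @ v)

def sigmoid_net(v):
    return W2 @ sigmoid(W1 @ v)

# Without an activation, the two layers equal the single matrix W2 @ W1.
collapses = np.allclose(linear_net(x), (W2 @ W1) @ x)

# A linear map satisfies f(2x) == 2 f(x); the sigmoid network does not.
linear_scales = np.allclose(linear_net(2 * x), 2 * linear_net(x))
sigmoid_scales = np.allclose(sigmoid_net(2 * x), 2 * sigmoid_net(x))

print(collapses, linear_scales, sigmoid_scales)
```

However many purely linear layers are stacked, the result is still one linear map, so it is the activation that lets the network bend its decision boundary.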

Non-Linearity and Neural Networks

Neural networks consist of multiple interconnected layers, each containing nodes or artificial neurons. These neurons process data and pass it along to the next layer. Without a non-linear activation function, any stack of such layers would reduce to a single linear transformation; introducing non-linearity allows neural networks to approximate highly complex functions, making them capable of solving intricate problems.

Activation Functions in Neural Networks

Activation functions define the output of individual neurons based on their input. While there are various activation functions, the Sigmoid Activation Function is preferred in certain scenarios due to its desirable properties, such as output range and smoothness.

Binary Classification

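Sigmoid functions are commonly used in binary classification tasks, where the final label must be either 0 or 1. The function itself outputs a value between 0 and 1 that is read as the probability of the positive class, and a threshold (often 0.5) converts that score into a label. For instance, in spam email detection, the Sigmoid Activation Function can help classify an email as spam (1) or not spam (0) based on certain features.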
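The spam example can be sketched as follows. The raw scores in `logits` and the 0.5 cutoff are made-up values for illustration, not the output of a real spam model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical raw scores (logits) a spam model might emit for four emails.
logits = np.array([-3.0, -0.5, 0.0, 2.5])
probabilities = sigmoid(logits)

# The sigmoid yields a probability-like score; a threshold turns it into a label.
threshold = 0.5  # an assumed default; tune it for the cost of each error type
labels = (probabilities >= threshold).astype(int)

for p, label in zip(probabilities, labels):
    print(f"P(spam) = {p:.3f} -> {'spam' if label else 'not spam'}")
```

The hard 0 or 1 comes from the threshold, not from the sigmoid, so the cutoff can be moved when false positives and false negatives have different costs.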

Applications of the Sigmoid Activation Function

The Sigmoid Activation Function finds applications in various fields due to its versatile nature and ability to handle binary classification tasks. Some of the key applications include:

Logistic Regression

Sigmoid functions are a natural fit for logistic regression models, where the goal is to classify data into one of two categories. The function converts a linear combination of the input features into the probability of the positive class, and the probability of the other class is simply one minus that value.

Artificial Neural Networks

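As mentioned earlier, Sigmoid Activation Functions are widely used in artificial neural networks. Historically they were common in hidden layers to introduce non-linearity and capture complex relationships in the data; in modern deep networks they appear mostly in output layers for binary predictions and in gating mechanisms, while hidden layers more often use ReLU-style functions.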

Backpropagation Algorithm

The Sigmoid Activation Function’s smoothness and differentiability are essential for the backpropagation algorithm, which is a fundamental component of training neural networks. Backpropagation involves calculating gradients to optimize the network’s parameters, and the smoothness of the Sigmoid function facilitates this process.
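The applications above come together in a small logistic regression trained by gradient descent. This is a minimal sketch on hand-made toy data; the learning rate, iteration count, and variable names are assumptions for this example. With a cross-entropy loss, the sigmoid's derivative cancels neatly, leaving the gradient with respect to the pre-activation equal to `p - y`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy, hand-made data: one feature, label 1 when the feature is positive.
X = np.array([[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]])
y = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])

w = np.zeros(1)
b = 0.0
learning_rate = 0.5  # assumed value, chosen only for this toy problem

for _ in range(200):
    p = sigmoid(X @ w + b)   # forward pass: predicted probabilities
    error = p - y            # gradient of cross-entropy w.r.t. the pre-activation
    w -= learning_rate * (X.T @ error) / len(y)
    b -= learning_rate * error.mean()

predictions = (sigmoid(X @ w + b) >= 0.5).astype(int)
print(predictions)
```

A neural network trained with backpropagation repeats the same pattern layer by layer, which is why a smooth, differentiable activation is required.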

Advantages of Using the Sigmoid Activation Function

The Sigmoid Activation Function offers several advantages that make it a popular choice in certain applications:

Output Range

The output of the Sigmoid function is always bounded between 0 and 1, which is particularly useful in scenarios where the model needs to provide a probability score or a binary classification.

Smoothness

The function is continuous and smooth, allowing for gradient-based optimization techniques like stochastic gradient descent to be applied effectively during the training process.

Interpretability

Because the output lies between 0 and 1, it can be read as a probability, making it easier to interpret the model’s confidence in its predictions.

Simple Derivative

The derivative of the Sigmoid function can be written in terms of the function’s own output, σ'(x) = σ(x)(1 - σ(x)), so it is cheap to compute once the forward pass is done, which is convenient for the gradient calculations in backpropagation.

Computationally Efficient

Evaluating the Sigmoid function requires only one exponential and one division per input, so its cost is modest, although it is more expensive than piecewise-linear functions such as ReLU.
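For readers who want to see where the simple derivative comes from, it follows from the definition σ(x) = 1 / (1 + e^(-x)) by the chain rule:

$$
\sigma'(x) = \frac{d}{dx}\left(1 + e^{-x}\right)^{-1}
= \frac{e^{-x}}{\left(1 + e^{-x}\right)^{2}}
= \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}}
= \sigma(x)\,\bigl(1 - \sigma(x)\bigr)
$$

The last step uses the identity 1 - σ(x) = e^(-x) / (1 + e^(-x)). The derivative is largest at x = 0, where σ(0) = 0.5 and σ'(0) = 0.25.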

Drawbacks of the Sigmoid Activation Function

While the Sigmoid Activation Function has its merits, it is not without its drawbacks:

Vanishing Gradient Problem

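One of the major limitations of the Sigmoid function is the vanishing gradient problem. The derivative never exceeds 0.25, and as the function saturates for inputs of large magnitude it approaches zero. When these small factors are multiplied across many layers during backpropagation, the gradient reaching the early layers can become tiny, leading to slow convergence during training.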

Output Bias

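The Sigmoid function outputs exactly 0.5 for an input of zero and stays near 0.5 only for inputs close to zero; for inputs of large magnitude it saturates toward 0 or 1. When a fixed 0.5 cutoff is applied to these outputs, predictions can be biased, especially if the data is imbalanced.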

Uncentered Output

The Sigmoid function outputs values that are always positive rather than centered around zero. The next layer therefore receives inputs that all share the same sign, which can make gradient-descent updates zig-zag and slow convergence.
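The vanishing gradient drawback can be made concrete with a short sketch. The helper name `sigmoid_grad` and the sample inputs are chosen for illustration, and the depth calculation ignores the weights between layers, which is a simplifying assumption.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    # Derivative expressed through the output: s * (1 - s).
    s = sigmoid(x)
    return s * (1.0 - s)

# The gradient peaks at x = 0 and shrinks quickly as the function saturates.
for x in [0.0, 2.0, 5.0, 10.0]:
    print(f"x = {x:5.1f}  gradient = {sigmoid_grad(x):.6f}")

# Best case through a chain of sigmoid layers: every factor is the maximum, 0.25.
# Weights between layers are ignored here, which is a simplifying assumption.
for depth in [1, 5, 10]:
    print(f"depth {depth:2d}: gradient factor at most {0.25 ** depth:.2e}")
```

Even in this best case the factor falls below one in a million after ten layers, which is why deep networks rarely use the sigmoid in every hidden layer.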

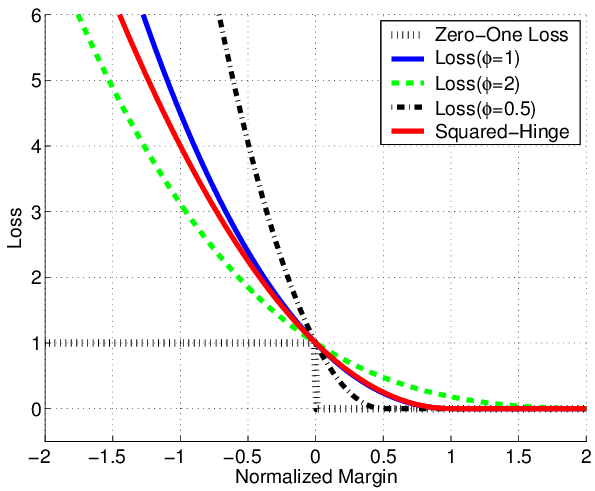

Sigmoid Activation Function vs. Other Activation Functions

To understand the strengths and weaknesses of the Sigmoid Activation Function better, it is essential to compare it with other popular activation functions:

ReLU (Rectified Linear Unit)

ReLU is another widely used activation function that mitigates the vanishing gradient problem by providing a constant gradient of 1 for positive inputs. However, ReLU has its challenges, such as the “dying ReLU” problem, where some neurons output zero for every input and stop learning during training.

Tanh (Hyperbolic Tangent)

Tanh is similar to the Sigmoid function but has a range between -1 and 1. Its zero-centered output addresses the uncentered output problem associated with the Sigmoid function, but it still saturates and therefore still suffers from the vanishing gradient problem.

Swish

Swish is a relatively new activation function that combines elements of both ReLU and the Sigmoid function: it multiplies the input by its own sigmoid, x · σ(x). It offers improved performance in certain scenarios but may not be suitable for all types of neural networks.

Leaky ReLU

Leaky ReLU is a variant of ReLU that addresses the “dying ReLU” problem. By introducing a small slope for negative inputs, it prevents neurons from becoming inactive during training.
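The functions compared above can be placed side by side in a few lines of NumPy. This is a sketch with assumed defaults: the `negative_slope` of 0.01 for Leaky ReLU is a commonly used value rather than one given in this article, and Swish is shown with its scaling parameter fixed to 1.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    return np.maximum(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    # negative_slope is an assumed, commonly used default.
    return np.where(x > 0, x, negative_slope * x)

def swish(x):
    # Swish with its scaling parameter fixed to 1.
    return x * sigmoid(x)

x = np.array([-2.0, 0.0, 2.0])
print("sigmoid   ", sigmoid(x))
print("tanh      ", np.tanh(x))
print("relu      ", relu(x))
print("leaky_relu", leaky_relu(x))
print("swish     ", swish(x))

# Tanh is a rescaled, zero-centered sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
tanh_matches = np.allclose(np.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0)
print(tanh_matches)
```

The final check shows why Tanh inherits the sigmoid's saturation: it is the same curve, stretched to the range -1 to 1 and shifted to pass through the origin.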

Sigmoid Activation Function in Machine Learning Algorithms

The Sigmoid Activation Function is not only limited to neural networks but also finds its applications in various machine learning algorithms:

Logistic Regression

As mentioned earlier, logistic regression models use the Sigmoid function to map the output to a probability score, facilitating binary classification.

Support Vector Machines (SVM)

The so-called sigmoid kernel used in some SVMs introduces non-linearity in the decision boundary. Despite its name, it is usually defined with the closely related hyperbolic tangent rather than the logistic Sigmoid itself.

Genetic Algorithms

In genetic algorithms, the Sigmoid function can be used to squash continuous gene parameter values into the range between 0 and 1.

FAQs

Can the Sigmoid Activation Function handle multi-class classification?

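Not on its own for mutually exclusive classes. A single Sigmoid output covers binary classification, and applying one Sigmoid per class handles multi-label problems where several classes can be true at once. When exactly one class must be chosen, Softmax is more appropriate.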

Does the Sigmoid Activation Function mitigate the vanishing gradient problem?

No. The Sigmoid function’s gradient is non-zero everywhere, but it never exceeds 0.25 and approaches zero as the function saturates, so the Sigmoid function is a common cause of vanishing gradients rather than a remedy for them. Alternatives such as ReLU are typically used to reduce the issue.

What are the alternatives to the Sigmoid Activation Function?

Some popular alternatives to the Sigmoid function include ReLU, Tanh, Swish, and Leaky ReLU, each with its own advantages and limitations.

Is the Sigmoid Activation Function used in deep learning?

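Yes, but selectively. In deep learning the Sigmoid Activation Function is commonly used in output layers for binary classification, which amounts to logistic regression on the learned features, and in shallow networks. In the hidden layers of deep networks it has largely been replaced by ReLU-style functions.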

Does the Sigmoid Activation Function have applications outside of machine learning?

Yes, the Sigmoid function finds applications in other fields, such as biology, where it is used to model S-shaped (logistic) growth curves.

Can the Sigmoid Activation Function handle regression tasks?

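The Sigmoid function is commonly used for binary classification and is generally not suitable for regression tasks, because its output is confined to the range between 0 and 1 while regression targets can take arbitrary continuous values. It is only appropriate when the target itself is bounded to that range, such as a proportion.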
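The multi-class question above can be illustrated by comparing Softmax with independent Sigmoid outputs. This is a sketch using hypothetical scores; the `softmax` helper and the example values in `logits` and `two` are assumptions for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtracting the maximum improves numerical stability; the result is unchanged.
    e = np.exp(z - np.max(z))
    return e / e.sum()

logits = np.array([2.0, 0.5, -1.0])  # hypothetical scores for three classes

# Softmax: mutually exclusive classes, probabilities sum to 1.
print(softmax(logits), softmax(logits).sum())

# Independent sigmoids: one yes/no decision per class, with no sum constraint.
print(sigmoid(logits), sigmoid(logits).sum())

# With two classes, softmax reduces to a sigmoid of the score difference.
two = np.array([1.2, -0.3])
reduces_to_sigmoid = np.isclose(softmax(two)[0], sigmoid(two[0] - two[1]))
print(reduces_to_sigmoid)
```

Seen this way, Softmax is the multi-class generalization of the Sigmoid function rather than an unrelated alternative.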

Conclusion

In conclusion, the Sigmoid Activation Function is a fundamental tool in the world of artificial intelligence and machine learning. Its unique properties, such as the output range and smoothness, make it well-suited for certain applications. However, it is essential to consider its limitations, such as the vanishing gradient problem and output bias, when using it in specific contexts. Understanding the strengths and weaknesses of the Sigmoid function can help data scientists and machine learning practitioners make informed decisions when designing and training neural networks.

Remember, the choice of activation function depends on the specific problem at hand, and experimenting with different functions can lead to better model performance. As technology and research continue to evolve, we may witness the emergence of new and improved activation functions that further enhance the capabilities of artificial neural networks.
